Introduction to Uncertainty Quantification 360

There are inherent uncertainties in the predictions of machine learning (ML) models. Knowing how uncertain the prediction might be influences how people act on it. For example, you may have encountered a weather-forecasting model telling you that there will be no rain tomorrow with 60% confidence. In that case you may still want to bring an umbrella when you go to work.

There is more to uncertainty in ML than the example above. Uncertainty is an important area of ML research, which has produced an abundance of uncertainty quantification (UQ) algorithms, metrics, and ways to communicate uncertainty to end users. Researchers at IBM Research have been actively working on these topics to bring critical transparency to ML models and engender trust in AI. You can read our papers.

We present Uncertainty Quantification 360 (UQ360), an extensive open-source toolkit with a Python package that gives data science practitioners and developers access to state-of-the-art algorithms. It aims to streamline estimating, evaluating, improving, and communicating the uncertainty of machine learning models, so that these steps become common practices for AI transparency.

In this overview, we introduce some core concepts in uncertainty quantification. You can use the navigation bar above to navigate to the two questions we will answer:

- What capabilities are included in UQ360 and why?

- How can you use the uncertainty information produced using UQ360?

An interactive demo allows you to further explore these concepts and capabilities offered by UQ360 by walking through a use case where different tasks involving UQ are performed. The tutorials and notebooks offer a deeper, data scientist-oriented introduction. A complete API is also available.

Why do we include many different UQ capabilities?

Our goal is to provide diverse capabilities that streamline the process of producing high-quality UQ information during model development. These capabilities let you: 1) estimate the uncertainty in predictions of an ML model, 2) evaluate the quality of those uncertainties and, if needed, improve it, and 3) communicate those uncertainties effectively to people who can make use of the UQ information.

Let’s start with the first objective.

Supervised ML typically involves learning a functional mapping between inputs (features) and outputs (predictions/recommendations/responses) from a set of training examples comprising input and output pairs. The learned function is then used to predict outputs for new instances or inputs not seen during training. These outputs may be real values in the case of a regression model, or categorical class labels in the case of a classification model. In this process, uncertainty can emerge from multiple sources:

- The available data may be inherently noisy; for instance, two examples with the same feature profiles may have different outcomes. This is often termed aleatoric uncertainty in the literature. Going forward, we will simply refer to it as data uncertainty: the inherent variability in the data instances and targets.

- The model mapping function may be ambiguous: given a set of training data, there may be different functions that explain them. This uncertainty about the model is termed epistemic uncertainty in the literature. We will simply refer to it as model uncertainty: multiple models (each characterized by a set of parameters) may be consistent with the observed data, and the lack of knowledge about a single appropriate model gives rise to model uncertainty.

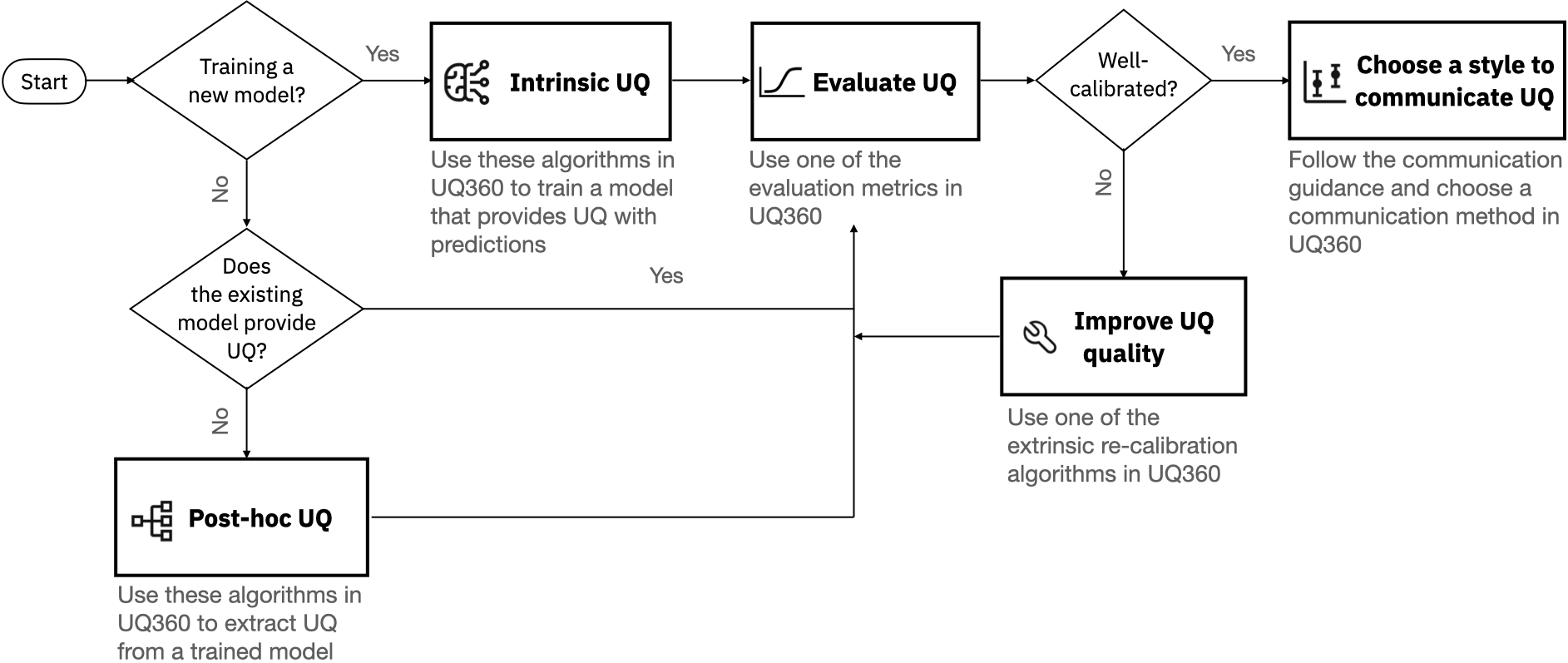
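The two sources described above can be separated numerically. The following is a minimal sketch, assuming a hypothetical ensemble in which every member predicts both a mean and a noise variance for the same input. It applies the law of total variance: the average predicted noise variance stands in for data uncertainty, and the disagreement between member means stands in for model uncertainty. The function name and the numbers are illustrative assumptions, not part of the UQ360 API.

```python
from statistics import fmean, pvariance

def decompose_uncertainty(member_means, member_variances):
    """Split the predictive variance for one input into data and model parts.

    member_means[i] and member_variances[i] are the predicted mean and the
    predicted noise variance from the i-th member of a hypothetical ensemble.
    """
    data_uncertainty = fmean(member_variances)   # average predicted noise
    model_uncertainty = pvariance(member_means)  # disagreement between members
    total = data_uncertainty + model_uncertainty
    return data_uncertainty, model_uncertainty, total

# Three hypothetical members that agree closely on a noisy target:
# most of the total variance is attributed to the data, not the model.
data_u, model_u, total_u = decompose_uncertainty(
    member_means=[2.0, 2.1, 1.9],
    member_variances=[0.5, 0.6, 0.4],
)
print(f"data={data_u:.4f} model={model_u:.4f} total={total_u:.4f}")
```

When the members disagree strongly, the second term dominates instead, which signals that the model, rather than the data, is the main source of uncertainty for that input.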

The UQ360 toolkit provides algorithms to estimate these different types of uncertainties. Depending on the type of model and the model development stage, different UQ algorithms should be applied. UQ360 currently provides 11 UQ algorithms, along with guidance on choosing UQ algorithms to help you find the appropriate one for your use case.

Moving on to our second goal: the quality of uncertainties generated by a UQ algorithm also needs to be evaluated. Poor-quality uncertainties should neither be trusted nor communicated to users. They should first be improved. To this end, UQ360 provides a set of metrics to measure the quality of uncertainties produced by different algorithms and a set of techniques for improving the quality of estimated uncertainties. You can read more about them in the guidance on choosing UQ algorithms.
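To illustrate what evaluating the quality of uncertainties can look like for a regression model, here is a minimal sketch of two widely used interval metrics: the fraction of observed values that fall inside their prediction intervals (coverage) and the average interval width. The helper names and the toy data are assumptions made for this example and are written in plain Python; they are not presented as UQ360's own implementation.

```python
def picp(y_true, y_lower, y_upper):
    """Fraction of observed values that fall inside their prediction interval."""
    hits = sum(lo <= y <= hi for y, lo, hi in zip(y_true, y_lower, y_upper))
    return hits / len(y_true)

def mpiw(y_lower, y_upper):
    """Average width of the prediction intervals."""
    return sum(hi - lo for lo, hi in zip(y_lower, y_upper)) / len(y_lower)

# Toy data: the third interval misses its observed value.
y_true = [1.0, 2.0, 3.0, 4.0]
y_lower = [0.5, 1.5, 3.2, 3.0]
y_upper = [1.5, 2.5, 4.0, 5.0]

coverage = picp(y_true, y_lower, y_upper)
width = mpiw(y_lower, y_upper)
print(f"coverage={coverage:.2f} mean width={width:.2f}")
```

The two numbers are best read together: intervals that are meant to cover 95% of outcomes but achieve far less are overconfident, while intervals that reach the target coverage only by being very wide carry little information.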

Lastly, the best way to communicate uncertainty estimates depends on the type of the model, the way the UQ information is going to be used, and the recipients of the information. For a classification model like the weather-forecasting one, the uncertainty is typically a score that is often referred to as confidence. For a regression model, the uncertainty could be communicated in various ways, including a range in which the predicted outcome is likely to fall, often referred to as a prediction interval, or using visualizations. UQ360 provides guidance for communicating uncertainty to help you choose an appropriate way to present uncertainty quantification.
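As a small illustration of the regression case, the sketch below turns a predicted mean and standard deviation into a prediction interval and a short message for a reader. It assumes the predictive distribution is Gaussian, which will not hold for every model, and the function names are hypothetical.

```python
from statistics import NormalDist

def prediction_interval(mean, std, coverage=0.95):
    """Central interval of a Gaussian predictive distribution."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2)  # about 1.96 for 95%
    return mean - z * std, mean + z * std

def describe(mean, std, coverage=0.95):
    """Format a prediction and its interval as a one-line message."""
    lo, hi = prediction_interval(mean, std, coverage)
    return (f"Predicted {mean:.1f}; "
            f"{coverage:.0%} prediction interval {lo:.1f} to {hi:.1f}")

lower, upper = prediction_interval(20.0, 2.0)
message = describe(20.0, 2.0)
print(message)
```

Whether a sentence like this, a plotted band, or a single confidence score works best depends on the audience, as discussed above.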

How can you use UQ?

Assuming that you have chosen an appropriate UQ algorithm, generated high-quality uncertainty estimates, and properly communicated them to the recipients, you may ask: how can the recipients use such information?

For end-users of an AI system, UQ adds critical transparency to its predictions, helping them assess whether they want to trust (and accept) a particular prediction, whether they should gather more information, or resort to an alternative judgment. It is imperative to present UQ information in high-stakes applications such as healthcare, finance, and security to prevent over-reliance on AI and facilitate better decision-making. For example, estimated prediction intervals of a regression model's prediction can be used to better assess what the actual outcome may be, as shown in the interactive demo.

UQ is also useful to model developers as a tool for improving their model. By estimating model uncertainty and data uncertainty separately, UQ helps the developers diagnose the source of uncertainty and guide the model improvement strategy. For example, model uncertainty can be reduced by collecting more training data in regions of high model uncertainty. Data uncertainty can be reduced by improving the measurement process or creating additional features that account for the variability in the training data. See the interactive demo for a data scientist's experience using UQ.
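The effect of collecting more training data can be seen in a toy experiment. The sketch below is an illustration under strong simplifying assumptions: each "model" merely predicts the mean of its training targets, and model uncertainty is approximated by the variance of that prediction across models fit on bootstrap resamples. The function name, the synthetic data, and the sample sizes are all hypothetical.

```python
import random
from statistics import fmean, pvariance

def bootstrap_model_uncertainty(targets, n_models=200, seed=0):
    """Variance of the prediction across models fit on bootstrap resamples.

    Each model is deliberately trivial: it predicts the mean of its
    resampled training targets.
    """
    rng = random.Random(seed)
    predictions = [
        fmean(rng.choices(targets, k=len(targets))) for _ in range(n_models)
    ]
    return pvariance(predictions)

# Synthetic targets: true value 5.0 with measurement noise of std 1.0.
rng = random.Random(42)
noisy_targets = [rng.gauss(5.0, 1.0) for _ in range(2000)]
small, large = noisy_targets[:20], noisy_targets

model_u_small = bootstrap_model_uncertainty(small)
model_u_large = bootstrap_model_uncertainty(large)
data_u_large = pvariance(large)  # spread of the targets themselves

print(f"model uncertainty, 20 samples:   {model_u_small:.5f}")
print(f"model uncertainty, 2000 samples: {model_u_large:.5f}")
print(f"data uncertainty, 2000 samples:  {data_u_large:.3f}")
```

In this setup the disagreement between models shrinks as the training set grows, while the noise in the targets stays close to its true variance of 1.0. That residual spread is the part that only a better measurement process or more informative features can reduce.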

Developing UQ capabilities for trustworthy AI

We have developed UQ360 to disseminate the latest research and educational materials for producing and applying uncertainty quantification in an AI lifecycle. This is a growing area, and we have developed this toolkit with extensibility in mind. We encourage you to contribute your UQ capabilities by joining the community. We also encourage the exploration of UQ's connection to other pillars of Trustworthy AI, namely fairness, explainability, adversarial robustness, and FactSheets.